Solving a real problem using our model: The Discrete Flashing Ratchet (DFR)

The following is adapted from [BCS+24]. This notebook is a detailed, step-by-step example of solving a physics problem with our trained model from Hugging Face. It follows this approach:

1. Understanding the problem
2. Loading and understanding the data
3. Using our model to infer the transition rates
4. Using the inferred transition rates to solve the original problem

In statistical physics, the ratchet effect refers to the rectification of thermal fluctuations into directed motion to produce work, and goes all the way back to Feynman [FLSH65].

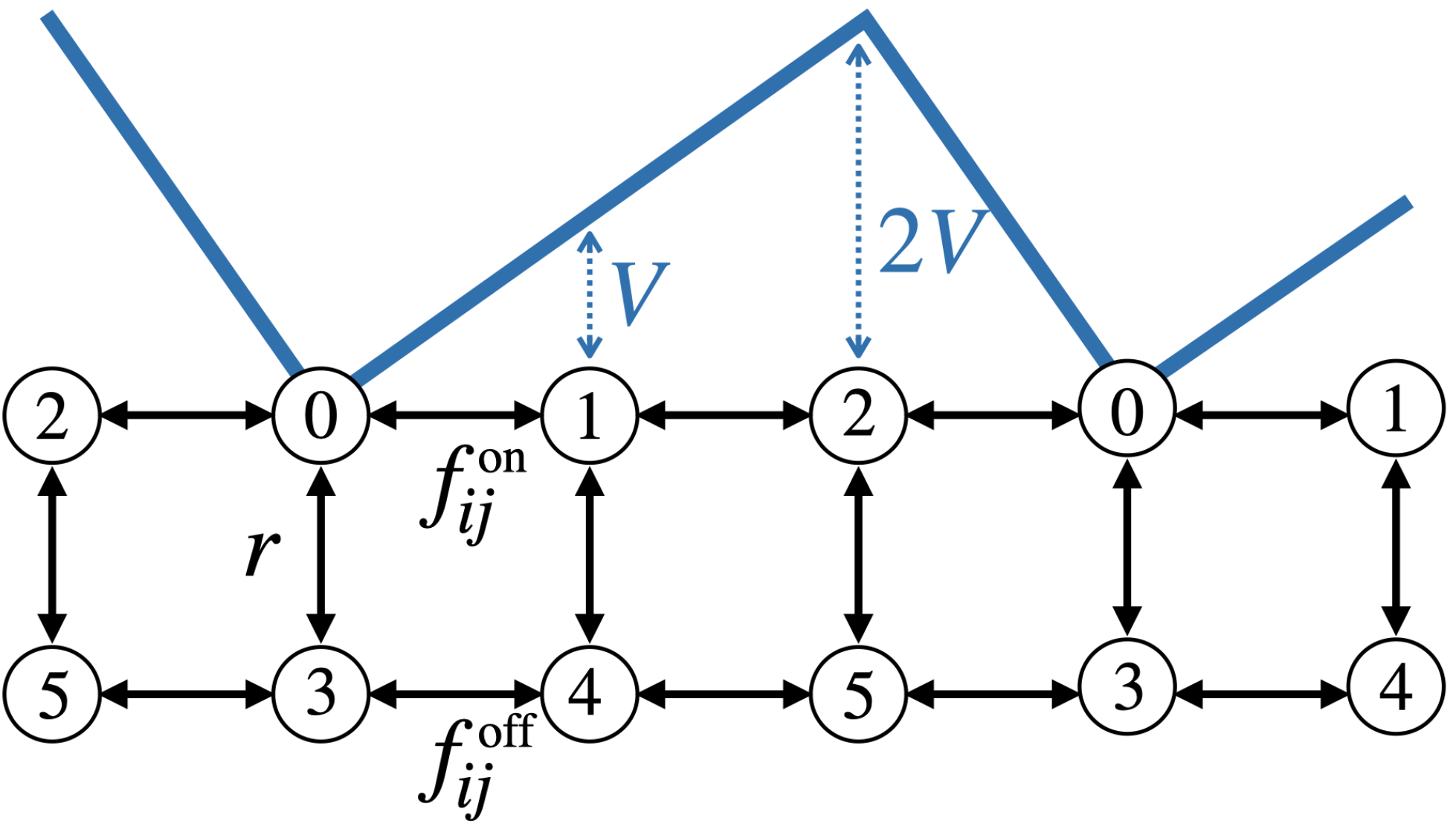

Here we consider a simple example thereof, in which a Brownian particle, immersed in a thermal bath at unit temperature, moves on a one-dimensional lattice. The particle is subject to a linear, periodic and asymmetric potential of maximum height \(2V\) that is switched on and off at a constant rate \(r\). When the potential is switched on, it takes three possible values, which correspond to three of the states of the system, and the particle jumps among them with rates \(f_{ij}^{\text{on}}\).

When the potential is switched off, the particle jumps freely with rates \(f_{ij}^{\text{off}}\).

We can therefore think of the system as a six-state system, as illustrated here:

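Similar to [RoldanP10], we now define the transition rates as

\[
f_{ij}^{\text{on}} = e^{-V(j-i)/2}, \qquad f_{ij}^{\text{off}} = B, \qquad i \neq j,\; i, j \in \{1, 2, 3\},
\]

while the potential itself is switched on and off at rate \(r\).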

Given these specifics, we consider the parameter set \((V, r, B) = (1, 1, 1)\) together with the dataset simulated by [SSanchez23]. From this data, we want to recover the theoretical transition rates in the form of a \(q\)-matrix.[1] For reference, we first compute the theoretical \(q\)-matrix:

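```python
import numpy as np

np.set_printoptions(linewidth=100)

# Rates while the potential is on: f_ij^on = exp(-V (j - i) / 2) with V = 1, zero on the diagonal
rates_on = np.array([[np.exp(-0.5 * (j - i)) - 1 * (i == j) for j in range(3)] for i in range(3)])

# Rates while the potential is off: f_ij^off = B = 1 for i != j
rates_off = np.ones(shape=(3, 3))
np.fill_diagonal(rates_off, 0)

# Switching rate r = 1 between (i, on) and (i, off)
id3 = np.eye(3)

# Assemble the 6x6 q-matrix: states 0-2 are (i, on), states 3-5 are (i, off)
q_matrix = np.zeros(shape=(6, 6))
q_matrix[:3, :3] = rates_on
q_matrix[3:, 3:] = rates_off
q_matrix[3:, :3] = id3
q_matrix[:3, 3:] = id3

# Diagonal entries make every row sum to zero
diagonal = -np.sum(q_matrix, axis=1)
np.fill_diagonal(q_matrix, diagonal)

q_matrix
```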

```
array([[-1.9744101 ,  0.60653066,  0.36787944,  1. ,  0. ,  0. ],
       [ 1.64872127, -3.25525193,  0.60653066,  0. ,  1. ,  0. ],
       [ 2.71828183,  1.64872127, -5.3670031 ,  0. ,  0. ,  1. ],
       [ 1. ,  0. ,  0. , -3. ,  1. ,  1. ],
       [ 0. ,  1. ,  0. ,  1. , -3. ,  1. ],
       [ 0. ,  0. ,  1. ,  1. ,  1. , -3. ]])
```
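The cell above fixes \((V, r, B) = (1, 1, 1)\). A minimal sketch of the same construction as a function of the three parameters could look as follows. `build_q_matrix` is a hypothetical helper introduced here, not part of the FIM package; it uses the same state ordering as the cell above (indices 0-2 for the potential on, 3-5 for the potential off).

```python
import numpy as np


def build_q_matrix(V: float, r: float, B: float) -> np.ndarray:
    """Hypothetical helper: DFR q-matrix for potential parameter V,
    switching rate r and free-jump rate B (states 0-2 on, 3-5 off)."""
    idx = np.arange(3)
    # f_ij^on = exp(-V (j - i) / 2) for i != j
    rates_on = np.exp(-0.5 * V * (idx[None, :] - idx[:, None]))
    np.fill_diagonal(rates_on, 0.0)
    # f_ij^off = B for i != j
    rates_off = np.full((3, 3), float(B))
    np.fill_diagonal(rates_off, 0.0)

    q = np.zeros((6, 6))
    q[:3, :3] = rates_on
    q[3:, 3:] = rates_off
    # Switching between (i, on) and (i, off) happens at rate r
    q[:3, 3:] = r * np.eye(3)
    q[3:, :3] = r * np.eye(3)
    # Diagonal entries make every row sum to zero
    np.fill_diagonal(q, -q.sum(axis=1))
    return q


q = build_q_matrix(1.0, 1.0, 1.0)
print(np.round(q, 4))
```

With \((V, r, B) = (1, 1, 1)\) this should reproduce `q_matrix` from the cell above, and other parameter sets can be explored by changing the arguments.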

Loading and exploring the data

We start by loading the simulated data from Hugging Face:

```python
# Loading the Discrete Flashing Ratchet (DFR) dataset from Hugging Face
from datasets import load_dataset
import torch

data = load_dataset("FIM4Science/mjp", download_mode="force_redownload", trust_remote_code=True, name="DFR_V=1")
data.set_format("torch")
```


The model will later use the ‘observation_grid’, ‘observation_values’ and ‘seq_lengths’ features to estimate the transition rates.

Here the observation grid is the same for all paths and consists of 100 evenly spaced points on \([0,1]\). The sequence length is therefore 100 for every path.

```python
data["train"]["observation_grid"][0, 0, :, 0]
```

```
tensor([0.0000, 0.0101, 0.0202, 0.0303, 0.0404, 0.0505, 0.0606, 0.0707, 0.0808,
        0.0909, 0.1010, 0.1111, 0.1212, 0.1313, 0.1414, 0.1515, 0.1616, 0.1717,
        0.1818, 0.1919, 0.2020, 0.2121, 0.2222, 0.2323, 0.2424, 0.2525, 0.2626,
        0.2727, 0.2828, 0.2929, 0.3030, 0.3131, 0.3232, 0.3333, 0.3434, 0.3535,
        0.3636, 0.3737, 0.3838, 0.3939, 0.4040, 0.4141, 0.4242, 0.4343, 0.4444,
        0.4545, 0.4646, 0.4747, 0.4848, 0.4949, 0.5051, 0.5152, 0.5253, 0.5354,
        0.5455, 0.5556, 0.5657, 0.5758, 0.5859, 0.5960, 0.6061, 0.6162, 0.6263,
        0.6364, 0.6465, 0.6566, 0.6667, 0.6768, 0.6869, 0.6970, 0.7071, 0.7172,
        0.7273, 0.7374, 0.7475, 0.7576, 0.7677, 0.7778, 0.7879, 0.7980, 0.8081,
        0.8182, 0.8283, 0.8384, 0.8485, 0.8586, 0.8687, 0.8788, 0.8889, 0.8990,
        0.9091, 0.9192, 0.9293, 0.9394, 0.9495, 0.9596, 0.9697, 0.9798, 0.9899,
        1.0000])
```
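The printed grid is consistent with 100 evenly spaced points on \([0,1]\), including both endpoints, which gives a spacing of \(1/99 \approx 0.0101\). Assuming that is how the grid was built, a NumPy equivalent for quick experiments would be:

```python
import numpy as np

# 100 evenly spaced points on [0, 1], both endpoints included
grid = np.linspace(0.0, 1.0, 100)
spacing = grid[1] - grid[0]
print(grid[:4].round(4), spacing)
```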

The observation values contain the state of each path at every point of the observation grid.

Warning

In practice, these state labels will rarely be observed directly; in most cases they have to be computed as part of the preprocessing.
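As an illustration of such a preprocessing step, the sketch below maps a hypothetical raw observation to the integer state labels used in the \(q\)-matrix above. The raw observation is assumed to consist of the particle's lattice position together with whether the potential is on. Labels 0-2 mean the potential is on and labels 3-5 mean it is off. The function name and input format are assumptions for illustration only; the actual preprocessing behind this dataset is not described here.

```python
import numpy as np


def encode_dfr_state(position, potential_on) -> np.ndarray:
    """Hypothetical preprocessing: map (lattice position in {0, 1, 2}, potential on/off)
    to a state label in {0, ..., 5}; labels 0-2 are 'on', labels 3-5 are 'off'."""
    position = np.asarray(position, dtype=int)
    potential_on = np.asarray(potential_on, dtype=bool)
    if np.any((position < 0) | (position > 2)):
        raise ValueError("positions must lie in {0, 1, 2}")
    # Off-states are shifted by 3, matching the ordering of q_matrix
    return position + 3 * (~potential_on)


labels = encode_dfr_state([0, 1, 2, 2, 0], [True, True, False, True, False])
print(labels)
```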

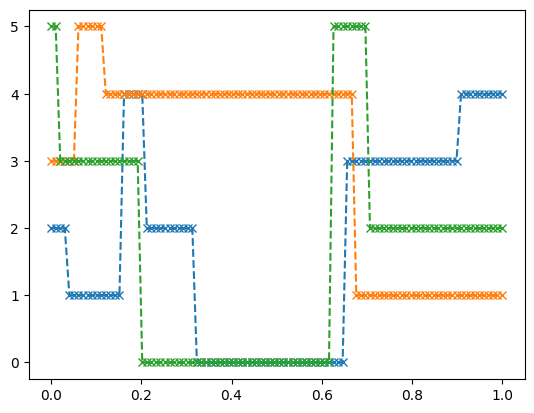

We can visualize the first 3 paths to get a feel for the process:

```python
import matplotlib.pyplot as plt

# Shared time grid and the state trajectories of the first three paths
ts = data["train"]["observation_grid"][0, 0, :, 0]
three_paths = data["train"]["observation_values"][0, :3, :, 0]

for i in range(3):
    plt.plot(ts, three_paths[i], "x--")

plt.show()
```
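For intuition about where such trajectories come from, here is a minimal sketch. It simulates a Markov jump process from a \(q\)-matrix with the Gillespie algorithm and records the occupied state on the same 100-point grid. This is not the simulation code of [SSanchez23]. The helper name, the random seed and the uniform initial state are assumptions made for this illustration.

```python
import numpy as np


def simulate_on_grid(q: np.ndarray, grid: np.ndarray, rng: np.random.Generator) -> np.ndarray:
    """Gillespie simulation of a Markov jump process with rate matrix q,
    returning the state occupied at each time of the sorted grid.
    Assumes every state has a positive exit rate."""
    n_states = q.shape[0]
    state = rng.integers(n_states)  # assumption: uniform initial state
    t = 0.0
    values = np.empty(len(grid), dtype=int)
    k = 0
    while k < len(grid):
        exit_rate = -q[state, state]
        # Holding time in the current state is exponential with mean 1 / exit_rate
        t_next = t + rng.exponential(1.0 / exit_rate)
        # The current state is observed at every grid point before the next jump
        while k < len(grid) and grid[k] < t_next:
            values[k] = state
            k += 1
        # Jump to j != state with probability q[state, j] / exit_rate
        probs = np.clip(q[state], 0.0, None)
        probs[state] = 0.0
        state = rng.choice(n_states, p=probs / probs.sum())
        t = t_next
    return values


# DFR q-matrix for V = r = B = 1 (states 0-2 on, 3-5 off)
idx = np.arange(3)
q = np.zeros((6, 6))
q[:3, :3] = np.exp(-0.5 * (idx[None, :] - idx[:, None]))
q[3:, 3:] = 1.0
q[:3, 3:] = np.eye(3)
q[3:, :3] = np.eye(3)
np.fill_diagonal(q, 0.0)
np.fill_diagonal(q, -q.sum(axis=1))

rng = np.random.default_rng(0)
grid = np.linspace(0.0, 1.0, 100)
paths = np.stack([simulate_on_grid(q, grid, rng) for _ in range(3)])
print(paths.shape)
```

Because several jumps can happen between two grid points, consecutive observed labels need not be neighbours in the \(q\)-matrix. Recovering the rates therefore cannot rely on simply counting observed transitions.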

Inferring transition rates

To infer the \(q\)-matrix we first load our trained model:

```python
from transformers import AutoModel

from fim.trainers.utils import get_accel_type

device = get_accel_type()

fimmjp = AutoModel.from_pretrained("FIM4Science/fim-mjp", trust_remote_code=True)
fimmjp = fimmjp.to(device)
fimmjp.eval()
```

```
FIMMJP(
  (gaussian_nll): GaussianNLLLoss()
  (init_cross_entropy): CrossEntropyLoss()
  (pos_encodings): DeltaTimeEncoding()
  (ts_encoder): RNNEncoder(
    (rnn): LSTM(8, 256, batch_first=True, bidirectional=True)
  )
  (path_attention): MultiHeadLearnableQueryAttention(
    (W_k): Linear(in_features=512, out_features=128, bias=False)
    (W_v): Linear(in_features=512, out_features=128, bias=False)
  )
  (intensity_matrix_decoder): MLP(
    (layers): Sequential(
      (linear_0): Linear(in_features=2049, out_features=128, bias=True)
      (activation_0): SELU()
      (linear_1): Linear(in_features=128, out_features=128, bias=True)
      (activation_1): SELU()
      (output): Linear(in_features=128, out_features=60, bias=True)
    )
  )
  (initial_distribution_decoder): MLP(
    (layers): Sequential(
      (linear_0): Linear(in_features=2049, out_features=128, bias=True)
      (activation_0): SELU()
      (linear_1): Linear(in_features=128, out_features=128, bias=True)
      (activation_1): SELU()
      (output): Linear(in_features=128, out_features=6, bias=True)
    )
  )
)
```

As noted in [BCS+24][2], it suffices to look at a small context window of 300 paths with 50 observation values each. We therefore infer the transition rates on 50 randomly drawn context windows of 300 paths each and average the results, which also demonstrates how little data may be needed.
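With the model call stripped out, the resampling scheme in the next cell draws several random context windows of paths without replacement and stacks the per-window estimates. The sketch below does the same in NumPy, using `rng.permutation` in place of `torch.randperm`. The estimator is a placeholder that stands in for the model: it returns each window's empirical state-occupation frequencies. `estimates_over_windows` and the toy data are illustrative only and not part of the FIM API.

```python
from typing import Callable

import numpy as np


def estimates_over_windows(
    paths: np.ndarray,
    estimator: Callable[[np.ndarray], np.ndarray],
    n_paths: int,
    n_windows: int,
    rng: np.random.Generator,
) -> np.ndarray:
    """Apply `estimator` to n_windows random context windows, each made of
    n_paths distinct paths, and stack the per-window estimates."""
    estimates = []
    for _ in range(n_windows):
        # Random subset of n_paths paths, drawn without replacement
        idx = rng.permutation(len(paths))[:n_paths]
        estimates.append(estimator(paths[idx]))
    return np.stack(estimates, axis=0)


def occupation(window: np.ndarray) -> np.ndarray:
    """Placeholder estimator: empirical frequency of each of the 6 states."""
    return np.bincount(window.ravel(), minlength=6) / window.size


rng = np.random.default_rng(0)
toy_paths = rng.integers(0, 6, size=(1000, 100))  # placeholder data, not the DFR dataset
stacked = estimates_over_windows(toy_paths, occupation, n_paths=300, n_windows=50, rng=rng)
mean_estimate = stacked.mean(axis=0)
print(stacked.shape, mean_estimate.round(3))
```

Averaging over windows reduces the variance of the final estimate. The windows overlap, however, so their estimates are not independent.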

```python
# Copy the data to the device, dropping the ground-truth quantities we want to infer
batch = {
    k: v.to(device)
    for k, v in data["train"][:1].items()
    if k not in ["intensity_matrices", "adjacency_matrices", "initial_distributions"]
}

n_paths = 300  # paths per context window
total_n_paths = batch["observation_grid"].shape[1]
statistics = 50  # number of random context windows

outputs = []
with torch.no_grad():
    for _ in range(statistics):
        # Random subset of n_paths paths, drawn without replacement
        paths_idx = torch.randperm(total_n_paths)[:n_paths]
        mini_batch = batch.copy()
        mini_batch["observation_grid"] = batch["observation_grid"][:, paths_idx]
        mini_batch["observation_values"] = batch["observation_values"][:, paths_idx]
        mini_batch["seq_lengths"] = batch["seq_lengths"][:, paths_idx]
        # We are currently not interested in variances or initial conditions
        outputs.append(fimmjp(mini_batch, n_states=6)["intensity_matrices"])
```

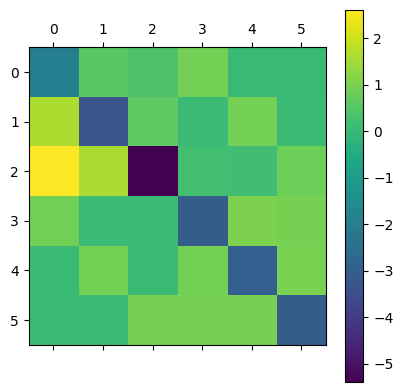

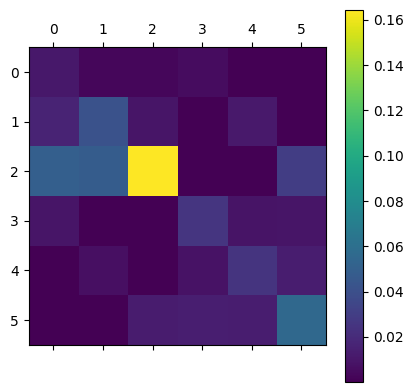

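Averaging over the 50 context windows results in the following inferred transition rates:

```python
# Average over the context windows; move to the CPU before converting to NumPy
mean_rates = torch.mean(torch.stack(outputs, dim=0), dim=0)[0].cpu().numpy()

print(mean_rates)

plt.matshow(mean_rates)
plt.colorbar()
plt.show()
```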

```
[[-1.9280841   0.56053436  0.36148313  0.9309061   0.03605918  0.03910116]
 [ 1.6167166  -3.274249    0.61205477  0.06211518  0.92600745  0.05735496]
 [ 2.6117969   1.5938491  -5.3943353   0.18486184  0.15495166  0.8488762 ]
 [ 0.90593773  0.05441669  0.06852371 -3.0303988   1.0427814   0.95873964]
 [ 0.0479439   0.9295158   0.06597461  0.95244545 -3.0012662   1.0053865 ]
 [ 0.07696303  0.05660908  0.97233003  0.9779892   0.9574757  -3.0413673 ]]
```
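Before comparing with the ground truth, one can check that the averaged output is itself a valid \(q\)-matrix, that is, its off-diagonal entries are non-negative and its rows sum to zero. The values below are copied from the printed output above; `is_valid_q_matrix` is a small helper introduced here for illustration.

```python
import numpy as np

# Inferred rates, copied from the output above
mean_rates = np.array([
    [-1.9280841, 0.56053436, 0.36148313, 0.9309061, 0.03605918, 0.03910116],
    [1.6167166, -3.274249, 0.61205477, 0.06211518, 0.92600745, 0.05735496],
    [2.6117969, 1.5938491, -5.3943353, 0.18486184, 0.15495166, 0.8488762],
    [0.90593773, 0.05441669, 0.06852371, -3.0303988, 1.0427814, 0.95873964],
    [0.0479439, 0.9295158, 0.06597461, 0.95244545, -3.0012662, 1.0053865],
    [0.07696303, 0.05660908, 0.97233003, 0.9779892, 0.9574757, -3.0413673],
])


def is_valid_q_matrix(q: np.ndarray, atol: float = 1e-4) -> bool:
    """Off-diagonal entries must be non-negative and every row must sum to zero."""
    off_diagonal = q[~np.eye(q.shape[0], dtype=bool)]
    return bool(np.all(off_diagonal >= 0) and np.allclose(q.sum(axis=1), 0.0, atol=atol))


print(is_valid_q_matrix(mean_rates))
print(np.abs(mean_rates.sum(axis=1)).max())
```

Some entries are exactly zero in the theoretical matrix, such as the rate from \((1,\text{on})\) to \((2,\text{off})\). In this output they come out small but positive rather than zero.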

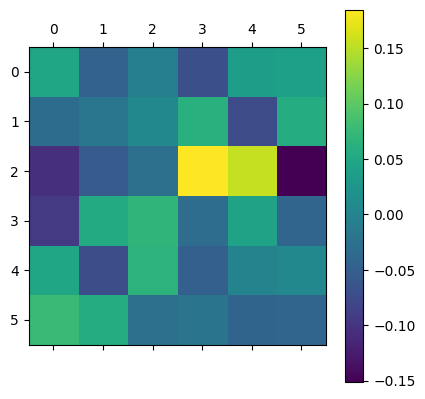

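and the following variances across the context windows:

```python
# Variance over the context windows; move to the CPU before converting to NumPy
variances = torch.var(torch.stack(outputs, dim=0), dim=0)[0].cpu().numpy()

print(variances)

plt.matshow(variances)
plt.colorbar()
plt.show()
```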

```
[[1.04813026e-02 2.61373702e-03 2.66511622e-03 5.38172154e-03 2.58207365e-05 2.75323437e-05]
 [1.66382026e-02 4.17281911e-02 9.40872636e-03 7.85213269e-05 1.14657814e-02 7.28697487e-05]
 [4.98300157e-02 4.80452850e-02 1.64404795e-01 6.07619993e-04 3.84297455e-04 3.02018654e-02]
 [9.53640509e-03 5.70461816e-05 1.07989734e-04 2.50910278e-02 8.49042926e-03 9.58491582e-03]
 [2.99498206e-05 7.01158447e-03 4.41958036e-05 7.87997153e-03 2.49586962e-02 1.31381685e-02]
 [1.25027917e-04 9.77873715e-05 1.22721968e-02 1.39522720e-02 1.31875239e-02 5.53975105e-02]]
```
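By default, `torch.var` applies Bessel's correction: it divides by \(N-1\) rather than \(N\), where \(N\) is the number of context windows here. `np.var` divides by \(N\) unless `ddof=1` is passed, so reproducing such variances in NumPy requires that argument. A small check on toy numbers, not the model output:

```python
import numpy as np

samples = np.array([0.9, 1.1, 1.0, 0.95, 1.05])  # toy estimates of a single rate
n = len(samples)
biased = np.var(samples)            # divides by n
unbiased = np.var(samples, ddof=1)  # divides by n - 1, like torch.var's default
manual = np.sum((samples - samples.mean()) ** 2) / (n - 1)
print(biased, unbiased, manual)
```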

Using the previously computed theoretical transition rates we can look at the pointwise error:

```python
error = mean_rates - q_matrix

print(error)

plt.matshow(error)
plt.colorbar()
plt.show()
```

```
[[ 0.04632597 -0.0459963  -0.00639631 -0.06909388  0.03605918  0.03910116]
 [-0.03200465 -0.01899715  0.00552411  0.06211518 -0.07399255  0.05735496]
 [-0.10648497 -0.05487221 -0.02733217  0.18486184  0.15495166 -0.15112382]
 [-0.09406227  0.05441669  0.06852371 -0.03039885  0.04278135 -0.04126036]
 [ 0.0479439  -0.07048422  0.06597461 -0.04755455 -0.00126624  0.00538647]
 [ 0.07696303  0.05660908 -0.02766997 -0.0220108  -0.04252428 -0.04136729]]
```
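To summarize the pointwise error, it can help to separate two groups of entries. The first group is exactly zero in the theoretical \(q\)-matrix: jumps between an on-state and an off-state at different lattice positions. The second group is everything else. The sketch below works on the printed error values, copied from the output above, and uses 0-based indices.

```python
import numpy as np

# Pointwise error mean_rates - q_matrix, copied from the output above
error = np.array([
    [0.04632597, -0.0459963, -0.00639631, -0.06909388, 0.03605918, 0.03910116],
    [-0.03200465, -0.01899715, 0.00552411, 0.06211518, -0.07399255, 0.05735496],
    [-0.10648497, -0.05487221, -0.02733217, 0.18486184, 0.15495166, -0.15112382],
    [-0.09406227, 0.05441669, 0.06852371, -0.03039885, 0.04278135, -0.04126036],
    [0.0479439, -0.07048422, 0.06597461, -0.04755455, -0.00126624, 0.00538647],
    [0.07696303, 0.05660908, -0.02766997, -0.0220108, -0.04252428, -0.04136729],
])

# Entries that are exactly zero in the theoretical q-matrix:
# (i, on) -> (j, off) and (i, off) -> (j, on) with i != j
off_diag3 = ~np.eye(3, dtype=bool)
structural_zero = np.zeros((6, 6), dtype=bool)
structural_zero[:3, 3:] = off_diag3
structural_zero[3:, :3] = off_diag3

abs_err = np.abs(error)
max_abs = abs_err.max()
location = tuple(int(k) for k in np.unravel_index(abs_err.argmax(), abs_err.shape))
zero_mae = abs_err[structural_zero].mean()
nonzero_mae = abs_err[~structural_zero].mean()
print(f"max |error| = {max_abs:.4f} at {location}")
print(f"mean |error| on structural zeros: {zero_mae:.4f}, elsewhere: {nonzero_mae:.4f}")
```

In this run the largest deviations sit in the third row, which holds the rates out of the highest on-state. The variances above are also largest in that row.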

Inferring the parameters

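We will now use the transition rates to recover the parameters used to generate the dataset. Recall the formula we previously used to calculate the theoretical transition rates:

\[
f_{ij}^{\text{on}} = e^{-V(j-i)/2}, \qquad f_{ij}^{\text{off}} = B, \qquad i \neq j,\; i, j \in \{1, 2, 3\}.
\]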

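The \(q\)-matrix (transition rate matrix) is therefore given by

\[
Q = \begin{pmatrix}
q_{11} & e^{-V/2} & e^{-V} & r & 0 & 0 \\
e^{V/2} & q_{22} & e^{-V/2} & 0 & r & 0 \\
e^{V} & e^{V/2} & q_{33} & 0 & 0 & r \\
r & 0 & 0 & q_{44} & B & B \\
0 & r & 0 & B & q_{55} & B \\
0 & 0 & r & B & B & q_{66}
\end{pmatrix},
\qquad q_{kk} = -\sum_{l \neq k} q_{kl},
\]

with the states ordered as \((1,\text{on}), (2,\text{on}), (3,\text{on}), (1,\text{off}), (2,\text{off}), (3,\text{off})\), since the transition rates between \((i,\text{on})\) and \((i,\text{off})\) (and vice versa) are given by the parameter \(r\).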

This system is evidently overdetermined. For simplicity we assume normally distributed independent errors, which results in the following MLEs for each of the parameters:
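The estimators can be read off the code cells that follow; this is our transcription, with \(i, j \in \{1,2,3\}\) and \(i \neq j\) in the sums. Each one is a plain average of six entries or six per-entry estimates. This is the MLE when those six quantities are independent and normally distributed around the true parameter with equal variance.

\[
\hat B = \frac{1}{6}\sum_{i \neq j} q_{(i,\text{off}),(j,\text{off})}, \qquad
\hat r = \frac{1}{6}\sum_{i=1}^{3}\left(q_{(i,\text{on}),(i,\text{off})} + q_{(i,\text{off}),(i,\text{on})}\right), \qquad
\hat V = \frac{1}{6}\sum_{i \neq j} \frac{-2\log q_{(i,\text{on}),(j,\text{on})}}{j-i}.
\]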

We can easily recover \(B\) from the off-diagonal entries of the lower-right ("off") block:

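```python
# Off-diagonal entries of the "off" block; copy so that mean_rates is not modified in place
lower_matrix = mean_rates[3:, 3:].copy()
np.fill_diagonal(lower_matrix, 0)
B_hat = lower_matrix.sum() / 6
B_hat
```

```
np.float32(0.98246956)
```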

Similarly, \(r\) describes the transition rates from a state \((i,\text{on})\) to \((i,\text{off})\) and vice versa:

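```python
# Average of the six switching rates (i, on) -> (i, off) and (i, off) -> (i, on)
r_hat = sum([mean_rates[i, i + 3] + mean_rates[i + 3, i] for i in range(3)]) / 6
r_hat
```

```
np.float32(0.91892886)
```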

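And \(V\) can be recovered from the upper-left ("on") block \((q_{i,j})_{i,j=1}^3\), since each of its six off-diagonal entries yields an estimate \(-2\log q_{ij}/(j-i)\) of \(V\):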

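```python
# Average of the six per-pair estimates -2 log q_ij / (j - i) from the "on" block
V_hat = (
    -2 * (np.log(mean_rates[0, 1]) + np.log(mean_rates[1, 2]))
    + 2 * (np.log(mean_rates[1, 0]) + np.log(mean_rates[2, 1]))
    - np.log(mean_rates[0, 2])
    + np.log(mean_rates[2, 0])
) / 6
V_hat
```

```
np.float32(1.0017122)
```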

This results in the following inferred parameters, to be compared with the true values \((V, r, B) = (1, 1, 1)\):

```python
(V_hat, r_hat, B_hat)
```

```
(np.float32(1.0017122), np.float32(0.91892886), np.float32(0.98246956))
```
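As a sanity check of the estimators themselves, independent of the model, they should return the true parameters exactly when applied to an exact \(q\)-matrix. The sketch below bundles the three estimators from the cells above into one hypothetical function, `estimate_parameters`. It writes the \(V\) estimator as the average of the six per-pair estimates \(-2\log q_{ij}/(j-i)\), which is algebraically the same as the explicit formula above. It also works on a copy, so the input matrix is not modified. `dfr_q_matrix` is likewise a helper introduced only for this check.

```python
import numpy as np


def estimate_parameters(q: np.ndarray) -> tuple[float, float, float]:
    """Average-based estimators of (V, r, B) from a 6x6 DFR q-matrix
    (states 0-2: potential on, states 3-5: potential off)."""
    # B: mean of the six off-diagonal entries of the "off" block (copy avoids mutating q)
    off_block = q[3:, 3:].copy()
    np.fill_diagonal(off_block, 0.0)
    B_hat = off_block.sum() / 6
    # r: mean of the six switching rates (i, on) <-> (i, off)
    r_hat = (np.trace(q[:3, 3:]) + np.trace(q[3:, :3])) / 6
    # V: mean of the six per-pair estimates -2 log q_ij / (j - i) from the "on" block
    i, j = np.nonzero(~np.eye(3, dtype=bool))
    V_hat = np.mean(-2.0 * np.log(q[i, j]) / (j - i))
    return float(V_hat), float(r_hat), float(B_hat)


def dfr_q_matrix(V: float, r: float, B: float) -> np.ndarray:
    """Exact DFR q-matrix with the same state ordering as above."""
    idx = np.arange(3)
    q = np.zeros((6, 6))
    q[:3, :3] = np.exp(-0.5 * V * (idx[None, :] - idx[:, None]))
    q[3:, 3:] = B
    q[:3, 3:] = r * np.eye(3)
    q[3:, :3] = r * np.eye(3)
    np.fill_diagonal(q, 0.0)
    np.fill_diagonal(q, -q.sum(axis=1))
    return q


print(estimate_parameters(dfr_q_matrix(1.0, 1.0, 1.0)))
print(estimate_parameters(dfr_q_matrix(2.0, 0.5, 3.0)))
```

Applied to `mean_rates`, the same function should return the values printed above, up to float32 rounding.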

Bibliography

[BCS+24] David Berghaus, Kostadin Cvejoski, Patrick Seifner, Cesar Ojeda, and Ramsés J. Sánchez. Foundation inference models for Markov jump processes. In The Thirty-eighth Annual Conference on Neural Information Processing Systems, 2024. URL: https://openreview.net/forum?id=f4v7cmm5sC.

[FLSH65] Richard P. Feynman, Robert B. Leighton, Matthew Sands, and Everett M. Hafner. The Feynman lectures on physics; vol. I. American Journal of Physics, 33(9):750–752, 1965.

[RoldanP10] É. Roldán and J. M. R. Parrondo. Estimating dissipation from single stationary trajectories. Physical Review Letters, 105(15):150607, 2010.

[SSanchez23] Patrick Seifner and Ramsés J. Sánchez. Neural Markov jump processes. In International Conference on Machine Learning, 30523–30552. PMLR, 2023.