Imputation for Dynamical Systems: A crash course

This tutorial introduces the paper [SCKS25] and its accompanying code. This page briefly explains the paper and the usage of our trained models. The next pages describe the features seen here in more detail and will allow you to use our models on your own data!

Introduction

Dynamical systems are mathematical systems that change with time according to a fixed evolution rule, and serve as representational and analytical tools for phenomena which generate patterns that change over time.

Very often, the recorded changes of these empirical patterns are such that they can be viewed as occurring continuously in time, and thus can be represented mathematically by systems whose evolution rule is defined through differential equations.

Dynamical systems governed by ordinary differential equations (ODEs) correspond to an important subset of these models, and describe the rate of change of a single parametric function \(\mathbf{x}: \mathbb{R}^+ \rightarrow \mathbb{R}^D\), which represents the state of the (\(D\)-dimensional) system, as time evolves, by means of a vector field \(\mathbf{f}: \mathbb{R}^+ \times \mathbb{R}^D \rightarrow \mathbb{R}^D\).

In equations, we write

\[\frac{d\mathbf{x}(t)}{dt} = \mathbf{f}(t, \mathbf{x}(t)).\]

We consider the general problem of imputing missing values in time series data, recorded from some empirical process \((\mathbf{y}^*: \mathbb{R}^+ \rightarrow \mathbb{R}^D)\) whose dynamics are assumed to be governed by some unknown ODE. In other words, we assume that both available and missing values in the series \(\mathbf{y}^*(\tau_1), \dots, \mathbf{y}^*(\tau_l)\) correspond to the values taken by the solution \(\mathbf{x}(t)\) of some hidden ODE, at the observation times \(\tau_1, \dots, \tau_l\), potentially corrupted by some noise signal of which only a few statistics are known. Therefore, the goal is to infer the ODE solution \(\mathbf{x}(t)\) that best interpolates the noisy time series \(\mathbf{y}^*(\tau_1), \dots, \mathbf{y}^*(\tau_l)\) and hence imputes its missing values.
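To make this setup concrete, the following is a minimal sketch (not the paper's data pipeline) that integrates a Rössler system, a standard three-dimensional ODE, with a hand-written fourth-order Runge-Kutta step. It then corrupts the first component of the sampled solution with multiplicative Gaussian noise and hides a random subset of the values. The parameter values (a = 0.2, b = 0.2, c = 5.7), the step size, the 5% noise level and the 10% missing rate are illustrative assumptions, and all function names are hypothetical.

```python
import random


def roessler(t, x, a=0.2, b=0.2, c=5.7):
    """Vector field f(t, x) of the Roessler system (autonomous, so t is unused)."""
    x1, x2, x3 = x
    return (-x2 - x3, x1 + a * x2, b + x3 * (x1 - c))


def rk4_step(f, t, x, h):
    """One classical Runge-Kutta step of size h for dx/dt = f(t, x)."""
    k1 = f(t, x)
    k2 = f(t + h / 2, tuple(xi + h / 2 * ki for xi, ki in zip(x, k1)))
    k3 = f(t + h / 2, tuple(xi + h / 2 * ki for xi, ki in zip(x, k2)))
    k4 = f(t + h, tuple(xi + h * ki for xi, ki in zip(x, k3)))
    return tuple(
        xi + h / 6 * (s1 + 2 * s2 + 2 * s3 + s4)
        for xi, s1, s2, s3, s4 in zip(x, k1, k2, k3, k4)
    )


def simulate(f, x0, h, num_steps):
    """Return observation times tau_i and the states x(tau_i) on a regular grid."""
    times, states = [0.0], [tuple(x0)]
    for i in range(num_steps):
        states.append(rk4_step(f, times[-1], states[-1], h))
        times.append((i + 1) * h)
    return times, states


rng = random.Random(0)  # fixed seed so the sketch is reproducible
taus, xs = simulate(roessler, (1.0, 1.0, 1.0), h=0.01, num_steps=500)

clean = [x[0] for x in xs]                                  # x(tau_i), first component
noisy = [v * (1.0 + rng.gauss(0.0, 0.05)) for v in clean]   # y*(tau_i)
missing = [rng.random() < 0.1 for _ in clean]               # True marks a missing value
observed = [None if m else y for y, m in zip(noisy, missing)]
```

The imputation task is then to recover the entries of `clean` at the positions where `observed` is `None`, given only the remaining noisy values and their observation times.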

Instead of training one complex model on a single empirical process, we trained a neural recognition model offline to infer a large and varied set of ODE solutions \(\mathbf{x}(t)\), from a synthetic dataset composed of noisy series of observations on those solutions, displaying different missing value patterns.

Two formulations of imputation problems

The classical formulation of the imputation problem typically involves different missing patterns and we will focus on two of them here. The first one is the so-called point-wise missing pattern, where individual vectors in the series randomly lack some of their components. The second one is the temporal missing pattern, where certain components of the vectors in the series are missing over consecutive observation times. To handle them, we make the following two simple assumptions.

For the point-wise missing pattern, we assume one can always find a certain time scale \(\tau_{\small\text{ simple}}\), or some (sequential) subset of observations, for which the best interpolating ODE solution is “simple”. Furthermore, we assume that the set of all such simple parametric functions can be well-represented by a heuristically constructed synthetic distribution.

For the temporal missing pattern, we assume that the time series involve more complex interpolating functions, meaning that no such \(\tau_{\small\text{ simple}}\) is to be found in this case. Although more complex in nature, we assume that these functions are locally “simple”, and that they often exhibit generic secular and seasonal structures, which encode important information about the missing values and can be well-represented by a second, synthetic distribution over parametric functions.
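The difference between the two missing patterns can be illustrated with boolean masks. The sketch below is a hypothetical, dependency-free illustration (the helper names, the 1% missing probability and the gap position are assumptions, not part of the FIM library); `True` marks a missing value.

```python
import random


def pointwise_mask(length, missing_prob, rng):
    """Each entry is independently missing with probability missing_prob."""
    return [rng.random() < missing_prob for _ in range(length)]


def temporal_mask(length, gap_start, gap_length):
    """One contiguous block of consecutive observation times is missing."""
    gap_end = min(length, gap_start + gap_length)
    return [gap_start <= i < gap_end for i in range(length)]


def longest_run(mask):
    """Length of the longest stretch of consecutive missing values."""
    best = current = 0
    for is_missing in mask:
        current = current + 1 if is_missing else 0
        best = max(best, current)
    return best


rng = random.Random(0)  # fixed seed so the sketch is reproducible
point = pointwise_mask(4096, 0.01, rng)
gap = temporal_mask(4096, gap_start=1000, gap_length=512)

print("point-wise: missing =", sum(point), "longest run =", longest_run(point))
print("temporal:   missing =", sum(gap), "longest run =", longest_run(gap))
```

In the point-wise case the missing values are isolated and surrounded by observations, whereas in the temporal case a whole window carries no local information, which is why the two settings are treated under different assumptions.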

These two problem settings are solved by two different models, called \(\texttt{FIM-}\ell\) and \(\texttt{FIM}\), which we will use in the next pages to solve some toy example problems!

We can see this distinction in action in the following code snippets, which sketch the usage of these two models on a simple dataset.

Warning

Since this page is only meant to sketch the capabilities of the models, we import the somewhat lengthy functions prepare_data and prepare_data_base. These can be found in the repo or, slightly adapted, on the later pages.

Similar to the MJP model, we first import some classes from the FIM library and load the model from Hugging Face.

Note

In practice, you probably want to use your GPU for most computations. To do so, you need to put both the model and the tensors containing the data on the device. As in the MJP case, our model supports torch syntax, so you can simply use model.to(device) with the appropriate device!

from tutorial_helper import prepare_data_base, visualize_prediction

from fim.models.imputation import FIMImputationWindowed

from datasets import load_dataset

import torch

import warnings

warnings.filterwarnings("ignore")

model = FIMImputationWindowed.from_pretrained("FIM4Science/fim-windowed-imputation")

base_model=model.fim_imputation.fim_base

We will now load some toy data and impute random missing values:

data = load_dataset("FIM4Science/roessler-example", download_mode="force_redownload", name="default")["train"]

data.set_format("torch")

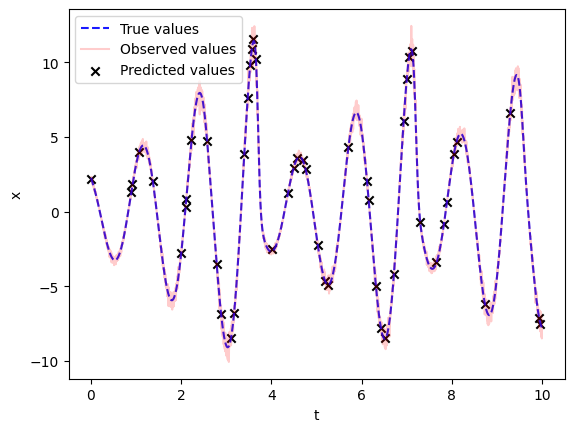

For the point-wise missing data, we access the base model and use it to predict the missing values, i.e. the values at the indices where the mask tensor has the value True. We first reshape the time grid and the observations, and draw a multiplicative noise term:

ts=data["t"][:].reshape(1,4096,1)

observed_x=data["x"][:].reshape(1,4096,1)

observed_v=data["x_prime"][:].reshape(1,4096,1)

multiplicative_error=(1+torch.normal(0,0.05,size=(1,4096,1)))

To showcase this, we randomly flip about 1% of the entries of the mask tensor to True. We then use the prepare_data_base function to format the data for the base model \(\texttt{FIM-}\ell\) and call \(\texttt{FIM-}\ell\) on the created data to get the imputed values:

mask = torch.ones_like(ts)*0.01

mask = torch.bernoulli(mask).type(torch.bool)

batch_x=prepare_data_base(ts,observed_x*multiplicative_error,mask=mask, num_windows=32)

base_model.eval()

with torch.no_grad():

    rtn=base_model(batch_x)

prediction_x = rtn["visualizations"]["solution"]["learnt"]

visualize_prediction(ts.flatten(),observed_x.flatten(),(observed_x*multiplicative_error).flatten(),prediction_x.flatten(),mask=mask.flatten())
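Because the toy data also contains the clean signal, one natural sanity check is to compare the prediction with the clean values only at the masked positions. The helper below is a hypothetical, dependency-free sketch that works on flat Python sequences; it is not part of the FIM library. With the tensors above, and assuming the prediction has the same length as the series, one could first convert them with `.flatten().tolist()`.

```python
import math


def masked_rmse(prediction, target, mask):
    """Root-mean-square error restricted to positions where mask is True."""
    errors = [(p - t) ** 2 for p, t, m in zip(prediction, target, mask) if m]
    if not errors:
        raise ValueError("mask selects no positions")
    return math.sqrt(sum(errors) / len(errors))


# Tiny worked example: only indices 1 and 3 are treated as missing.
target = [0.0, 1.0, 2.0, 3.0]
prediction = [0.0, 1.5, 2.0, 2.0]
mask = [False, True, False, True]

score = masked_rmse(prediction, target, mask)  # sqrt((0.25 + 1.0) / 2)
print(score)
```

Restricting the error to the masked positions avoids rewarding a model for merely reproducing values it was given as input.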

See the next chapter for details on how we can use the \(\texttt{FIM-}\ell\) model to infer the underlying dynamics!

Similarly, we can use the \(\texttt{FIM}\) model to impute values in the case of a temporal missing pattern, i.e. when values are missing over a longer time frame for which we no longer assume a simple structure of the underlying dynamics. This part can be found here.

Bibliography

[SCKS25] Patrick Seifner, Kostadin Cvejoski, Antonia Körner, and Ramses J Sanchez. Zero-shot imputation with foundation inference models for dynamical systems. 2025. URL: https://openreview.net/forum?id=NPSZ7V1CCY.